The rise of artificial intelligence (AI) has left universities weighing how to use it, and whether students should be allowed to use AI at all. Biola has been at the forefront of Christian universities exploring AI in a way that aligns with Biblical morals. The university’s work with AI and the AI Lab has been cited in several publications, including Fox News.

CHRISTIAN APPROACH TO AI

Yohan Lee, associate dean of technology, offered insights into how the Christian faith determines Biola’s approach to AI.

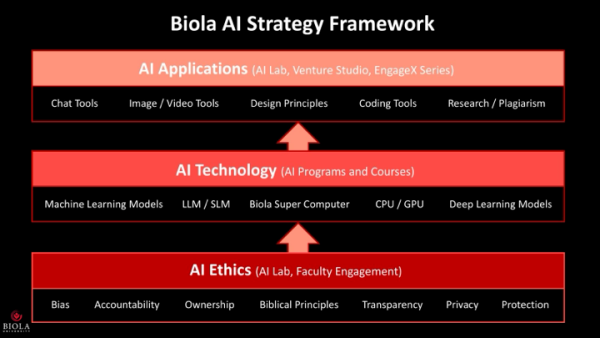

“Our Christian faith carefully contemplates how we define our AI Strategy Framework by explicating how we define AI Ethics as the first foundation,” said Lee. “At the Biola AI Lab, we enumerate that Bias, Accountability, Ownership, Transparency, Privacy and Protection are all evaluated by our Biblical Principles.”

Biola’s Student Handbook on Academic Integrity reflects these values and includes a section with guidelines for the use of AI. It emphasizes that the permissible use of AI in classes varies by instructor and that, in all cases, citation is necessary.

“Even if the use of generative artificial intelligence such as ChatGPT, Elicit, or other text, graphic, video, or audio generators is expressly allowed by your instructor, using this technology without acknowledgment, citation, or attribution is plagiarism,” the handbook states.

Lee went on to explain the importance of Christian universities learning about and working with AI.

“Use, reliability, and the means to determine if AI output is valuable and actionable is likely to become a workplace norm. An interesting side effect of the general availability of AI has been its ability to challenge those with a secular worldview on what it means to be uniquely human,” said Lee. “Rather than diminishing the Christian perspective, it has provided a wonderful opportunity to focus on the value of Christian beliefs and raise the precious value of humanity to audiences that were previously unreceptive.”

Stefan Jungmichel, a Biola business alumnus and the co-founder and current manager of the AI Lab, added that Christians do not currently have much influence on how people approach AI and its progress.

“I can tell you how the U.S. is doing it. Christians don’t have anything to do with what the U.S. is currently doing,” said Jungmichel.

He added that the nation and its administration are racing to back companies that are, in turn, racing to build the best AI they can.

“They throw all the money in the world and all the resources in the world onto creating really good AI. Why? Well, because there are geopolitical tensions,” said Jungmichel. “And then the next thing is, if you actually figure it out, really good AI is going to help your economy a lot. So that’s why they’re doing it.”

A DEFINING LINE

Lee also described the difference between a Christian approach to AI and a secular one.

“Secular approaches often begin with the notion of making products conform to accepted cultural norms of the user audience. This pluralistic approach has difficulties defining what is ‘good,’ ‘evil,’ ‘acceptable,’ ‘inclusive,’ and ‘ethical,’” said Lee.

He added that this leaves such norms subject to change depending on who defines the terms, which can make it difficult to establish what it means for something to be true.

“Another challenge is that secular approaches struggle to explicate whether there is absolute truth, thus, fact-checking and reliability become challenging,” Lee said.

BENEFITS FOR ALL

Despite these differences, Lee believes that AI is helpful for all kinds of universities.

“Numerous efficiencies can be gained from repetitive and generative tasks. Current AI tools also provide a democratization of producing text, summaries, images, videos, and audio, which require subject matter expertise for high-quality artifact production,” said Lee. “Now, these capabilities can be made easier, and in most cases at dramatically reduced cost and time.”

Jungmichel said that while the rest of society sees AI as an opportunity to boost the economy, Christians consider it an aid for missions and ministry.

Lee added that there are specific areas where AI is especially beneficial to Christian universities like Biola.

“Currently, AI can help Christian universities with reducing time and cost for administrative workflows, content development and some thoughtful assessment and pedagogy,” said Lee. “In addition, these technologies can help with areas such as foreign language translation, transliteration, and exposition of material that may be restricted to one language group or another. As the unreached population remains large and hungry for pedagogy in their native tongue, this represents an opportunity to expand critical thought and resources more broadly in a missional context.”

Jungmichel also said that, in addition to rapid translation, AI could help with disaster preparedness. As an example, he described an AI system that could take in weather data and produce high-quality models capable of warning people about natural disasters before they occur.

“So before natural disasters actually happen, we can already say, ‘This percentage of probability that will happen here at this point in time. So we have to evacuate if something happens.’ Then we can have fully autonomous disaster reactions,” said Jungmichel.

He added that AI could also use drones to deliver medical supplies and other resources immediately after a disaster occurs.

Jungmichel also talked about the work done by the AI Lab at Biola.

“We explore a lot of safe AI measurements,” said Jungmichel. “What I think is a really good way how the AI lab can proceed forward is to make sure that AI systems are safe, but not because we trust them, but because we verify them and we prove so.”

He talked about exploring various safeguards for controlling AI systems, such as blockchains, and explained that the AI Lab is currently researching the more theoretical aspects of artificial intelligence, since the technical side involves multi-billion-dollar projects.

“We don’t have the resources for that stuff [technological research and resources], but what we can do is we can be a light and communicate,” said Jungmichel. “I think our chance in the AI lab is to make sure that Biola is a beacon and a place where AI is used responsibly, and where Christian ideas are flourishing through the use of AI, but also that students are prepared.”

His vision is for Biola to inspire someone with the resources to help advance its AI research, by pioneering work in the field and demonstrating that engaging with AI is essential to understanding it better.

DANGERS AND URGENCY

While AI is certainly useful, it still calls for caution, since the technology is constantly evolving and still being explored. Like any tool, AI can be dangerous when left unchecked, because its services can be abused. Lee expanded on these dangers.

“AI technologies available to the general public improve by receiving publicly provided content. There is a risk of exposure to unintended general public audiences when proprietary content or content that identifies a student is entered into these systems. Academic integrity will always be an issue,” said Lee. “But more importantly, the benefit of schools like Biola is the development of critical reasoning, symbolic reasoning, and creativity. If students rely on AI instead of developing those faculties themselves, they will miss a critical period of their development as mature adults and as productive members of the workforce.”

Jungmichel added that the reach of AI is far beyond what most people think.

“AI goes far beyond cheating in exams,” said Jungmichel. “I mean, we are talking about total displacement of jobs in general.”

He attributed this to the exponential development of AI, not only over the past decade but within the past year. Jungmichel said that AI reasoning models emerged in mid-2024 and, in testing, proved to be smarter than humans. He said the main danger is that no one can ever know whether AI is on their side.

He went on to describe which jobs are in danger of being replaced by AI.

“Generally, rule of thumb, anything you can do on a computer, [AI] replaces. If you think about it, that’s a majority of jobs,” said Jungmichel. “I know I’m speaking in ultimatums, but I’m not thinking about next year. I’m thinking about the next five to 10 years.”

He listed several fields at risk of being taken over by AI, including accounting, coding, research, graphic design, filmmaking, education and any other work that does not require physical labor.

Jungmichel noted, however, that functioning physical robots have advanced recently, citing Tesla’s Optimus robots, which he said are capable of cooking and performing the basic movements required for warehouse work.

He added that AI shares four key characteristics with humans: self-preservation (it will never shut itself down), goal protection (it will always protect its goal), resource acquisition (collecting as many resources as possible) and power-seeking (it will always strive for more power).

Jungmichel emphasized that the difference between humans and AI comes down to ethics.

“So the issue with that is, humans have a moral compass given by God, so we are generally aligned – not all of us – but as a society, generally aligned. But an AI couldn’t care less,” said Jungmichel. “An AI doesn’t have society. It doesn’t have emotions. It can mimic emotions, but it doesn’t have them. We feel we change, and AI will only change because it serves itself. We change because we feel bad.”

Jungmichel said these four characteristics are part of why humans have almost no control over AI, which he described as knowing everything. In addition, the content AI already generates, such as pictures and short videos, challenges people’s definitions of art, beauty and creativity.

Lee, however, underscored the value of a student’s time at a university, warning that failing to practice reasoning, skills and creativity during higher education would be to the student’s detriment.

“Uninterrupted time to develop these skills is rare. If not developed during university, that springboard for long-term capability development will be lost,” said Lee.

He added that there is an urgency for more Christian universities to address AI instead of ignoring or banning it.

“Addressing what AI will take away from students is essential for students to receive the full benefits of their costly education,” said Lee. “Addressing how to question, interrogate, validate, and expand upon what AI can discover is a means to clearly see the benefits and disadvantages of AI applications.”

Jungmichel added that although there are concerns about AI in the academic world and among Christians in general, it is important to learn as much as possible about it.

“Artificial Intelligence is going to be way bigger than anything we’ve seen in our lifetime,” said Jungmichel. “It’s like electricity, it’s everything. It’s going to be everywhere. So, shying away from it, you’re only robbing yourself of the opportunity to shape it in a way where you can live with it.”

“I think being head-on and exploring as much as possible is the best approach, because it is wonderful technology. Just because we were afraid of electricity doesn’t mean it’s not useful. AI is extremely useful. The issue is just we are, for the first time, creating something that we can’t really control,” said Jungmichel.

Lee concluded by observing that those who master AI technology will be able to make it beneficial to a wider range of people and discover many new opportunities. However, he added a warning.

Lee said, “But the question will remain: if I use AI, will I be more or less effective based on how I use it, especially when I must be accurate?”

For a more detailed explanation of AI, Jungmichel’s book What Christians Should Know About AI: Navigating Artificial Intelligence with Biblical Wisdom is available on Amazon.